Abstract

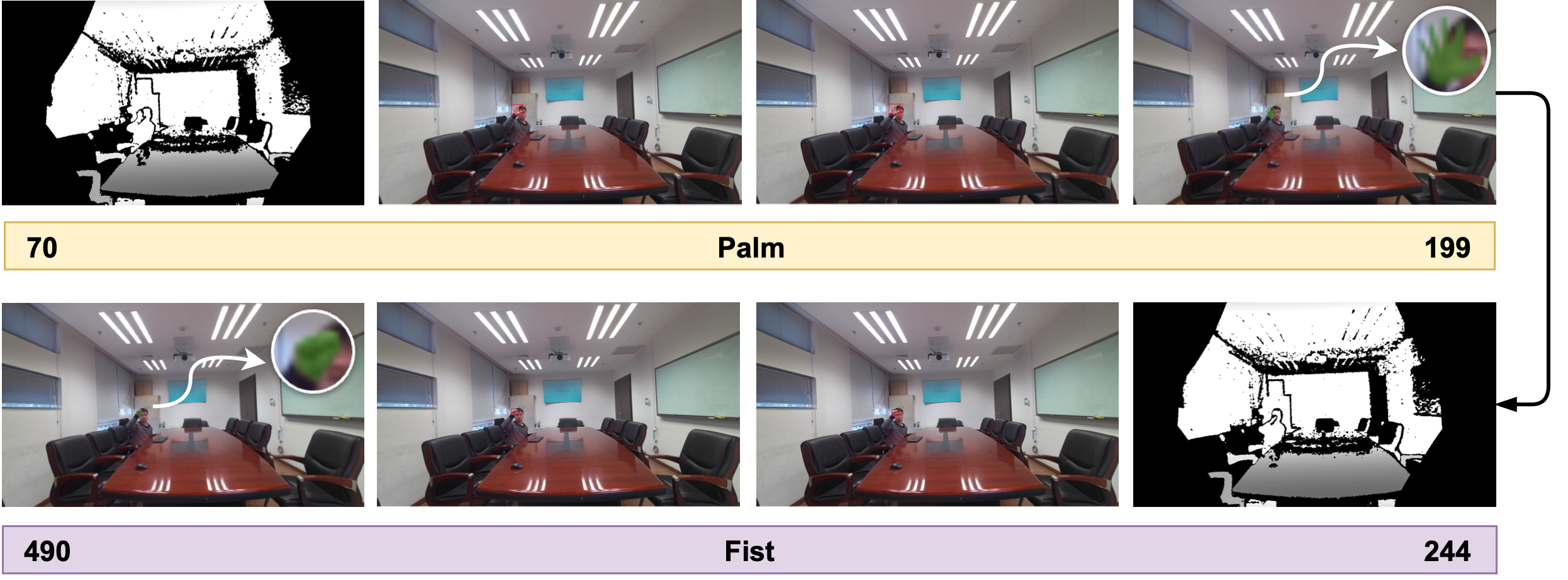

Examples of the three annotation types in LD-ConGR+, one for each of three tasks: continuous gesture recognition, hand detection, and hand segmentation.

We present LD-ConGR+, a new RGB-D video dataset designed to facilitate research and applications in gesture recognition, detection, and segmentation for long-distance human-computer interaction scenarios (e.g., meetings and smart rooms), which have been largely overlooked so far. LD-ConGR+ differs from existing gesture datasets in three primary aspects. First, it offers long-distance gestures performed up to 4 m from the camera, whereas existing datasets typically collect gestures within 1 m of the camera. Second, LD-ConGR+ provides fine-grained annotations, including gesture categories and temporal localization for continuous gesture recognition, as well as hand bounding boxes and segmentation masks for potential long-distance hand-centric research. Third, the dataset features high-quality videos, captured at high resolution (1280×720 for color streams and 640×576 for depth streams) and a high frame rate (30 fps). On top of LD-ConGR+, we conducted extensive experiments and studies to evaluate our proposed baseline models on continuous gesture recognition, and to benchmark approaches for isolated gesture recognition, long-distance hand detection, and long-distance hand segmentation. We anticipate that LD-ConGR+, together with our proposed baselines and evaluation analysis, will contribute to the advancement of long-distance gesture recognition as well as more general long-distance hand analysis research.
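To make the annotation types above concrete, the following is a minimal Python sketch of how one annotated video could be represented in memory. It is an illustration only: the class names, field names, box format, and the placeholder labels `gesture_a` and `gesture_b` are assumptions, not the dataset's actual file format or class list. Only the 30 fps frame rate and the 1280×720 color resolution come from the description above.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

FPS = 30  # frame rate stated for LD-ConGR+ videos


@dataclass
class GestureSegment:
    """One gesture instance in a continuous video (hypothetical layout)."""
    label: str        # gesture category (placeholder names used below)
    start_frame: int  # first frame of the gesture, inclusive
    end_frame: int    # last frame of the gesture, inclusive

    def duration_seconds(self, fps: int = FPS) -> float:
        # Inclusive frame range, so add 1 before dividing by the frame rate.
        return (self.end_frame - self.start_frame + 1) / fps


@dataclass
class HandAnnotation:
    """Hand bounding box on one color frame (hypothetical layout)."""
    frame: int
    box_xywh: Tuple[int, int, int, int]  # assumed pixel units in the 1280x720 color frame


@dataclass
class VideoAnnotation:
    """All annotations for one video (hypothetical layout)."""
    video_id: str
    segments: List[GestureSegment] = field(default_factory=list)
    hands: List[HandAnnotation] = field(default_factory=list)

    def labels_at(self, frame: int) -> List[str]:
        # Gesture labels whose temporal extent covers the given frame.
        return [s.label for s in self.segments
                if s.start_frame <= frame <= s.end_frame]


# Made-up example values, not taken from the dataset.
example = VideoAnnotation(
    video_id="demo_video",
    segments=[
        GestureSegment("gesture_a", 30, 89),
        GestureSegment("gesture_b", 150, 194),
    ],
    hands=[HandAnnotation(frame=30, box_xywh=(600, 300, 48, 52))],
)

print(example.segments[0].duration_seconds())
print(example.labels_at(150))
```

Keeping temporal segments and per-frame hand boxes in separate lists reflects that the two annotation types serve different tasks (continuous gesture recognition versus hand detection). A segmentation mask could be attached to `HandAnnotation` in the same way.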

Highlights:

Long-distance

In contrast to existing gesture recognition datasets, in which hands are recorded within 1 meter of the camera, we record gestures with the subject 1 to 4 meters from the camera.

Diversity

The videos in LD-ConGR+ are collected from 33 volunteers who dress differently and perform gestures at various hand movement speeds under different illumination conditions.

High-quality

The color and depth streams are captured synchronously at 30 fps with resolutions of 1280×720 and 640×576, respectively.

Fine-grained annotations

The different fine-grained annotations of LD-ConGR+ make it applicable to multiple hand analysis research tasks, including isolated/continuous gesture recognition, hand detection, and hand segmentation.

Comparison and Statistics

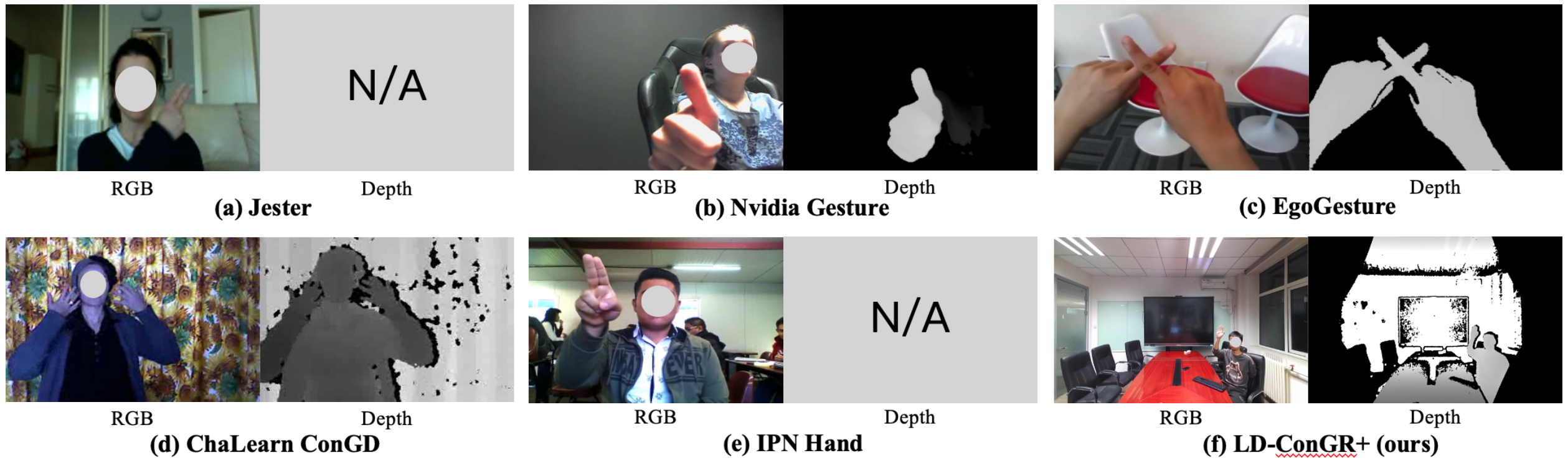

Example frames from gesture recognition datasets.

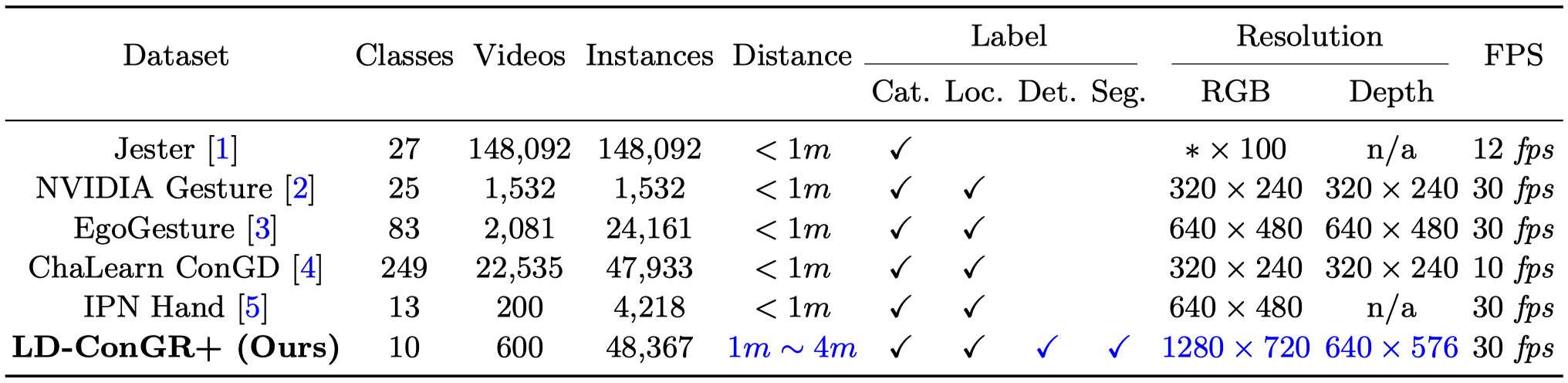

Comparison of LD-ConGR+ and popular gesture recognition datasets.

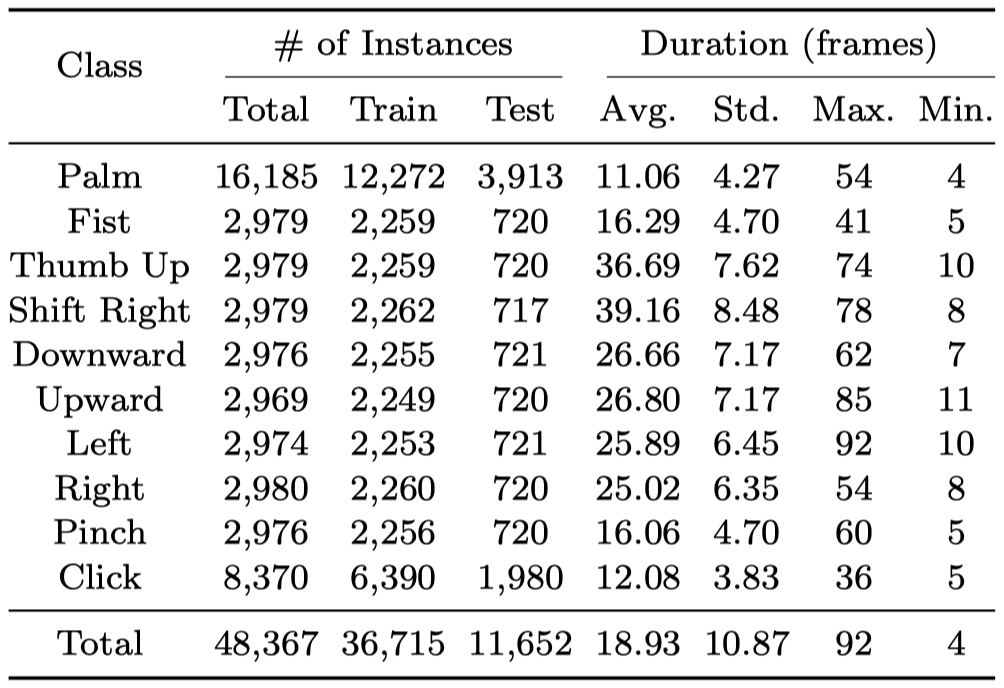

Statistics of the proposed LD-ConGR+ dataset.

Experiments

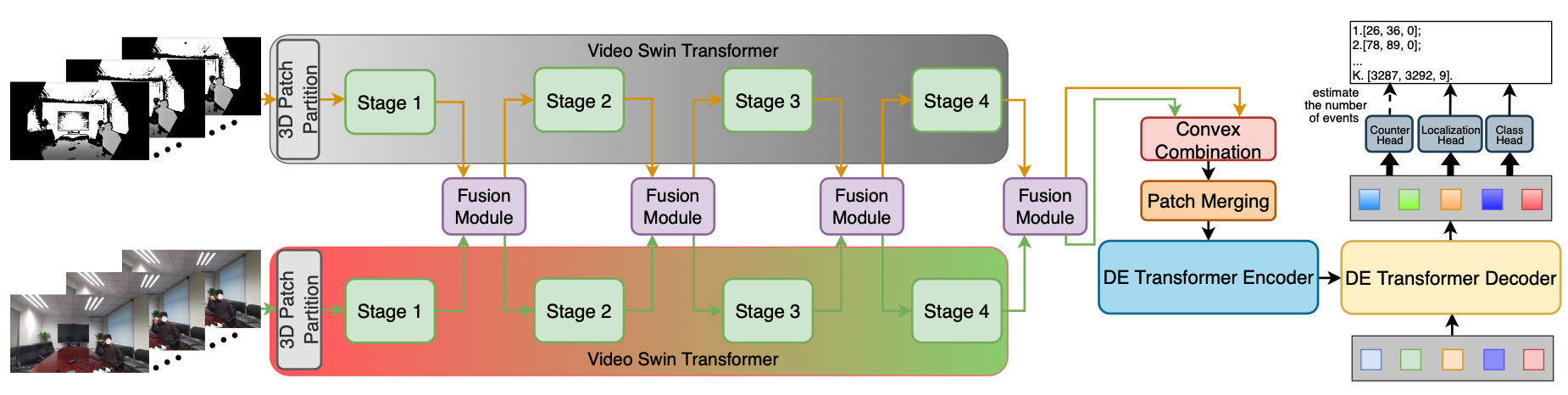

Architecture of MMFT, our newly proposed method.

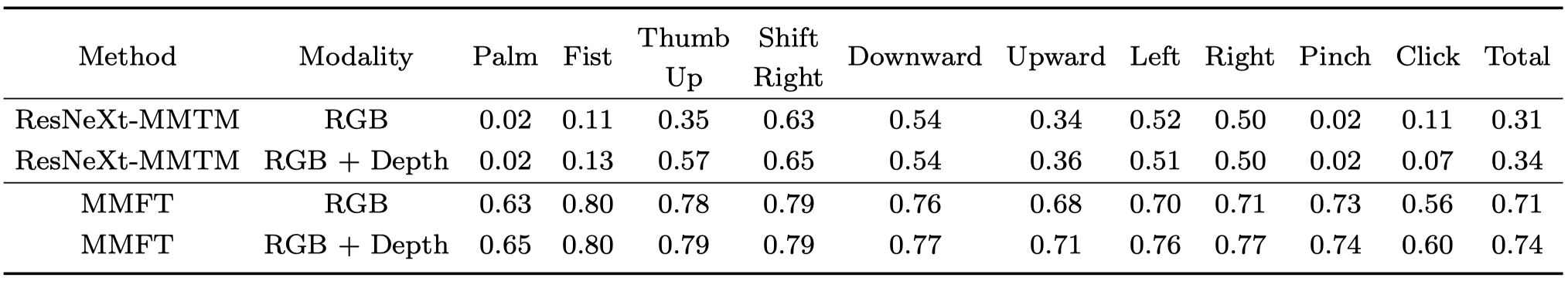

Results of our two baseline methods (ResNeXt-MMTM is proposed in our conference paper).

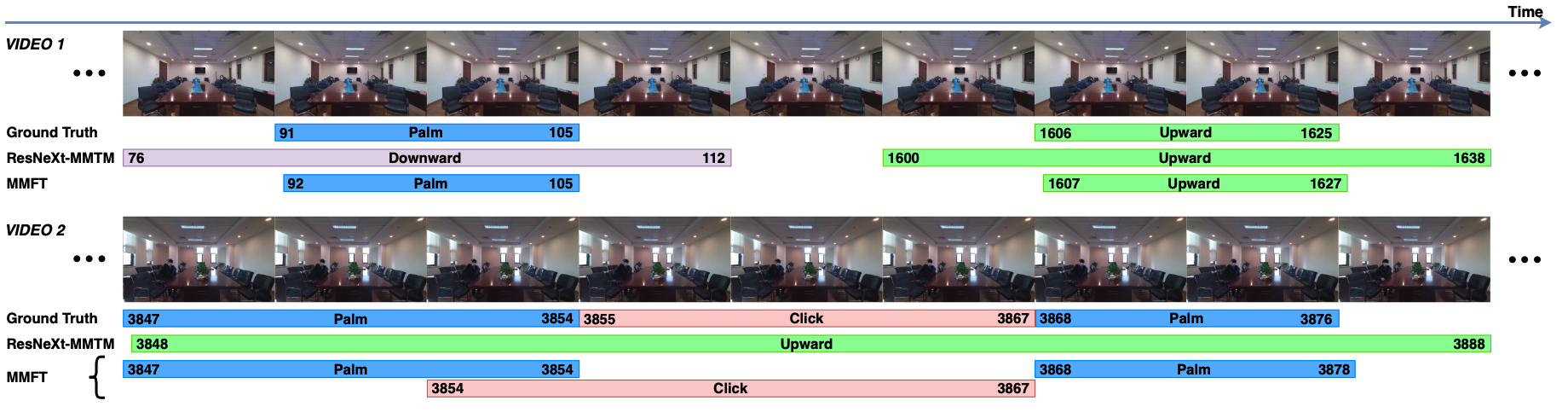

Qualitative results on the continuous gesture recognition task of LD-ConGR+.

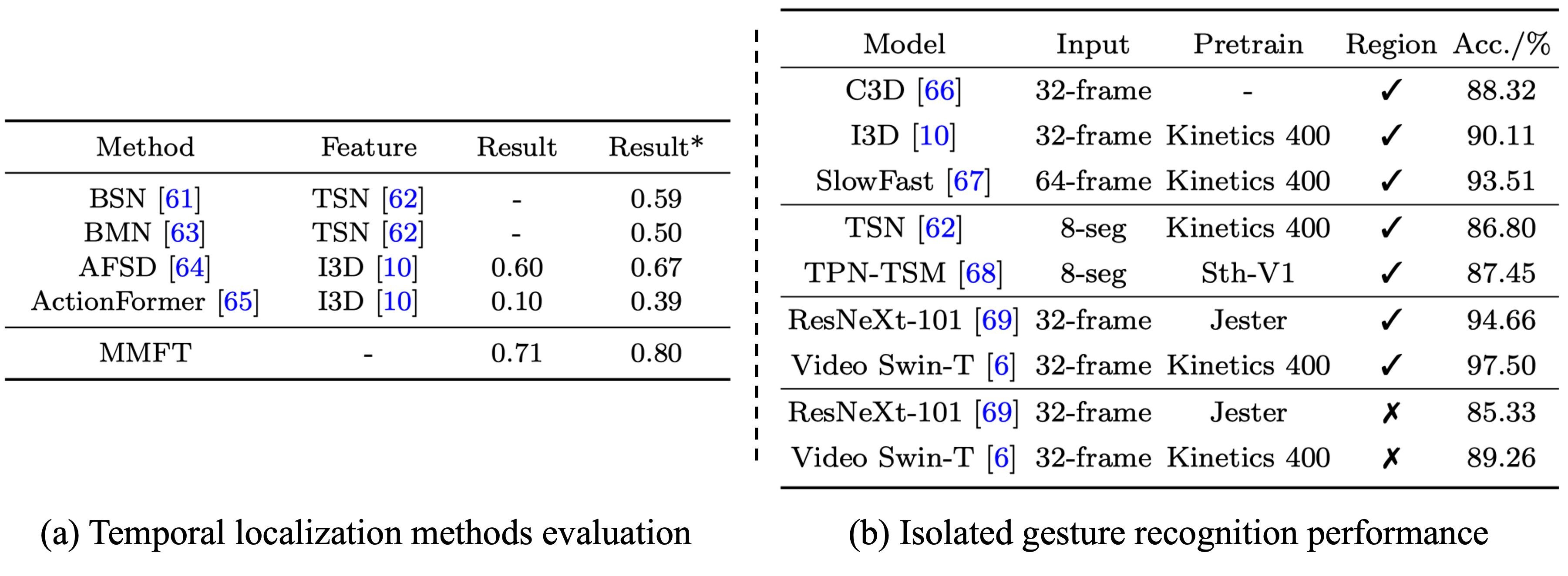

Evaluation of temporal localization methods on continuous gesture recognition, and of action recognition methods on isolated gesture recognition.

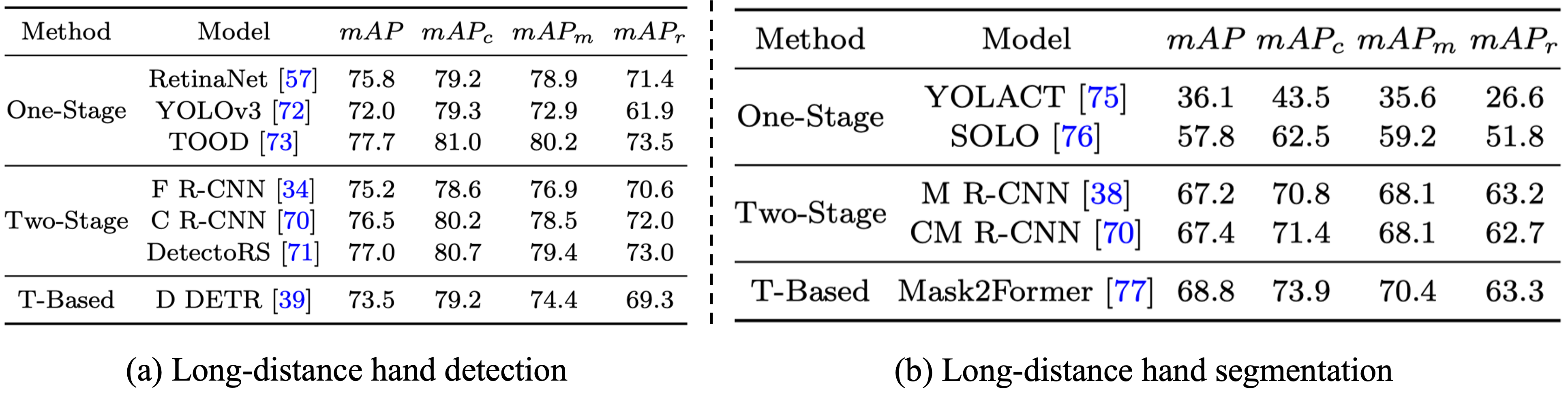

Long-distance hand detection/segmentation performance on LD-ConGR+.